Robots.txt Monitoring & Alerts

Never miss a robots.txt change. Monitor crawler access rules, sitemap declarations, and SEO directives, and get automatic alerts whenever your robots.txt file is modified. Checks run daily.

No credit card required

Monitor Your Robots.txt in 3 Simple Steps

Add Your Domain

Enter your website domain and we'll automatically detect and start monitoring your robots.txt file.

Automatic Monitoring

We check your robots.txt daily for any changes in directives, sitemaps, or crawler access rules.

Instant Alerts

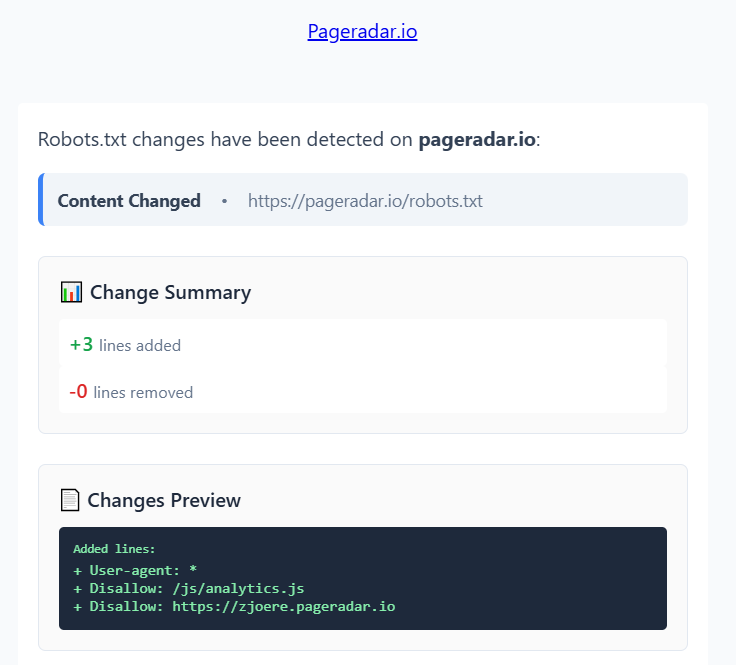

Get email notifications with a detailed change diff whenever your robots.txt is modified.

Complete Robots.txt Monitoring Solution

Professional monitoring tool designed to catch every robots.txt change.

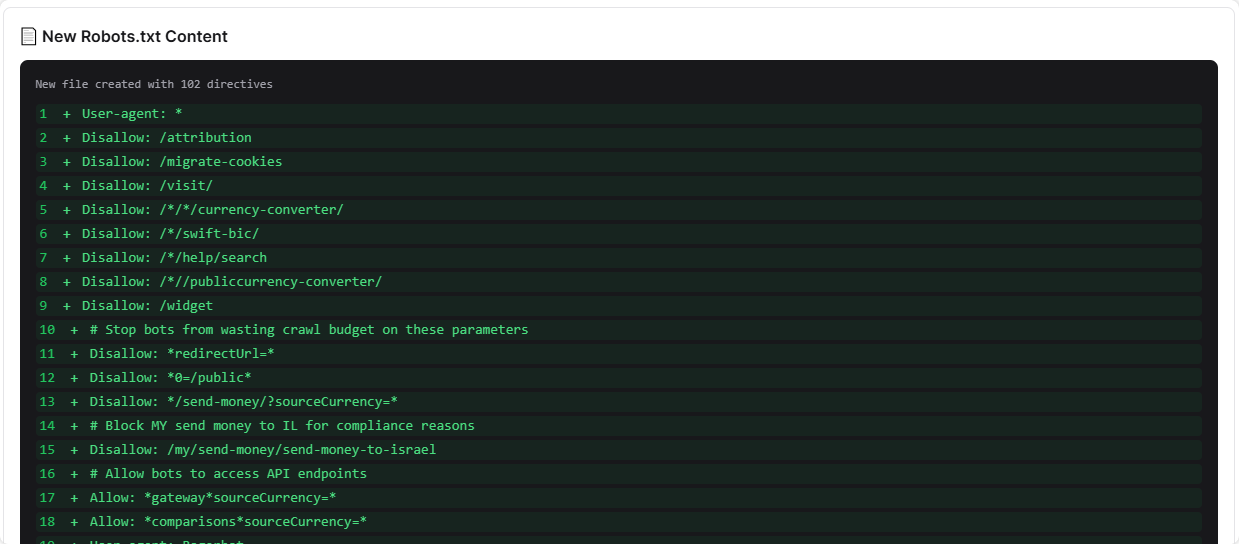

Detailed Change Notifications

Get instant email alerts with a visual diff showing exactly what changed in your robots.txt: added directives, removed rules, and modified settings.

- Visual diff comparisons

- Directive-level change analysis

- Instant email notifications
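A visual diff of the kind described above is, at its core, a line-by-line comparison of two snapshots of the file. The minimal sketch below uses Python's standard `difflib` module to produce a unified diff between a previous and a current robots.txt; the function name `robots_diff` and the sample snapshots are hypothetical, and this is an illustration of the technique, not PageRadar's implementation.

```python
import difflib


def robots_diff(old: str, new: str) -> list[str]:
    """Return unified-diff lines comparing two robots.txt snapshots."""
    return list(
        difflib.unified_diff(
            old.splitlines(),
            new.splitlines(),
            fromfile="robots.txt (previous)",
            tofile="robots.txt (current)",
            lineterm="",  # splitlines() already removed the newlines
        )
    )


# Hypothetical snapshots taken on two consecutive daily checks.
previous = """User-agent: *
Disallow: /cart/
Sitemap: https://example.com/sitemap.xml"""

current = """User-agent: *
Disallow: /cart/
Disallow: /products/
Sitemap: https://example.com/sitemap.xml"""

for line in robots_diff(previous, current):
    print(line)
```

Lines prefixed with `+` were added and lines prefixed with `-` were removed. Here the diff flags a new `Disallow: /products/` rule, exactly the kind of change that could hide product pages from search engines.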

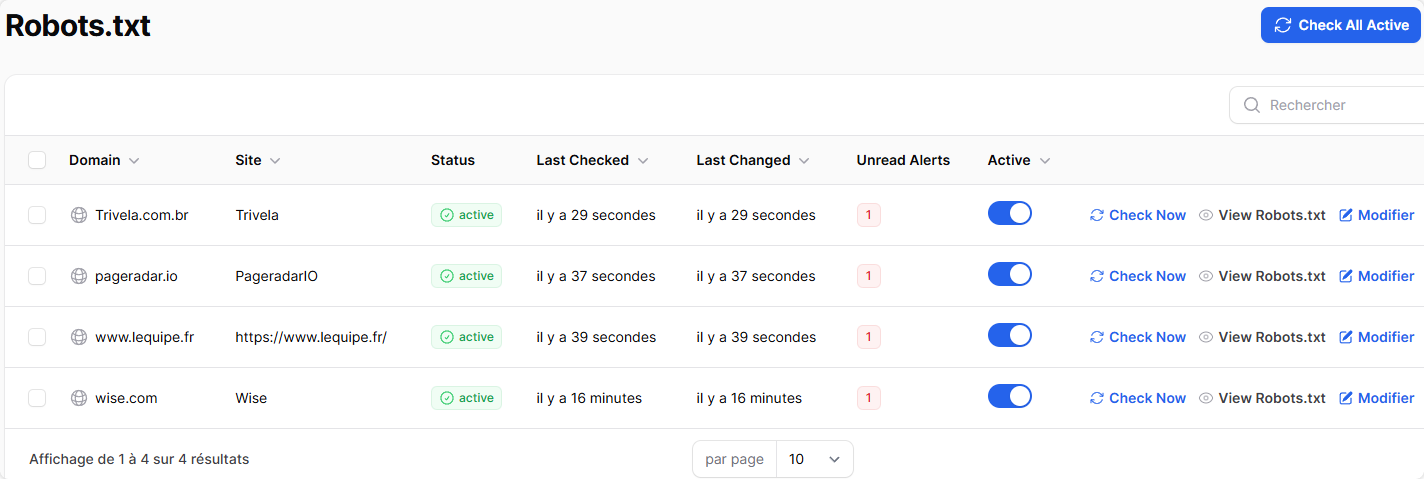

Monitor Multiple Websites

Centralized robots.txt monitoring across all your websites. Perfect for agencies, e-commerce networks, site migrations, or businesses with multiple domains.

- Unlimited domains

- Centralized dashboard

- Custom check frequencies

Perfect for websites with good SEO rankings

E-commerce Sites

Protect the indexing of your product category pages and prevent accidental blocking of the crawlers behind shopping surfaces such as Google Shopping.

Content Websites

Monitor sitemap declarations and ensure your blog posts and articles remain accessible to search engines.

Enterprise Websites

Track changes across multiple domains and prevent accidental blocking of important business pages.

Why Choose PageRadar for Robots.txt Monitoring?

| Feature | PageRadar | Manual Monitoring | Other Tools |
|---|---|---|---|
| Monitoring Frequency | Daily | When you remember | Daily |
| Visual Diff View | Built-in | Basic | |
| Change History | Full timeline | Limited | |
| Multi-Site Support | Unlimited | Time consuming | Extra cost |
| Pricing | Starting at 29€/month | Free but unreliable | $80+/month |

Simple pricing

Start Monitoring Your Robots.txt Today

No credit card required. Cancel anytime.

Need Custom Pricing?

Large volume? Special requirements? We offer tailored solutions for enterprises and agencies.

- Volume discounts available

- Custom integrations

- Priority support

- Dedicated account manager

FAQ

Common Questions

Everything you need to know about robots.txt monitoring.

We check your robots.txt files daily, so any change is detected within 24 hours at most, giving you prompt notice of potential SEO issues.

We track all changes, including User-agent directives, Disallow/Allow rules, Sitemap declarations, Crawl-delay settings, and any other robots.txt directives.
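Directive-level tracking compares what the file means rather than how its lines are laid out. The sketch below is a simplified parser written for illustration, not PageRadar's actual implementation; the `Directive` type and the `parse_directives` and `diff_directives` functions are hypothetical. It ties each rule to the `User-agent` lines that precede it, treats `Sitemap` lines as applying to the whole file, and ignores comments and spacing, so purely cosmetic edits are not reported as changes.

```python
from typing import NamedTuple


class Directive(NamedTuple):
    group: tuple[str, ...]  # sorted user agents the rule applies to; empty for Sitemap lines
    field: str              # lower-cased directive name, e.g. "disallow"
    value: str              # directive value as written, e.g. "/admin/"


def parse_directives(text: str) -> set[Directive]:
    """Parse robots.txt text into a set of directives tied to their user-agent group."""
    directives: set[Directive] = set()
    agents: list[str] = []
    in_rules = False  # becomes True once a rule follows the current User-agent lines
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments (assumes no "#" inside values)
        if ":" not in line:
            continue  # blank or malformed line
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:  # a User-agent line after rules starts a new group
                agents, in_rules = [], False
            agents.append(value.lower())
        elif field == "sitemap":
            directives.add(Directive((), field, value))  # sitemaps apply to the whole file
        else:
            in_rules = True
            directives.add(Directive(tuple(sorted(agents)), field, value))
    return directives


def diff_directives(old: str, new: str) -> tuple[set[Directive], set[Directive]]:
    """Return (added, removed) directives between two robots.txt versions."""
    before, after = parse_directives(old), parse_directives(new)
    return after - before, before - after


old = """User-agent: *
Disallow: /admin/
Crawl-delay: 10

Sitemap: https://example.com/sitemap.xml"""

new = """User-agent: *
Disallow: /admin/
Disallow: /blog/

Sitemap: https://example.com/sitemap.xml
Sitemap: https://example.com/news-sitemap.xml"""

added, removed = diff_directives(old, new)
for label, changes in (("+", added), ("-", removed)):
    for d in sorted(changes):
        scope = ", ".join(d.group) or "whole file"
        print(f"{label} {d.field}: {d.value} [{scope}]")
```

Because directives are stored in sets, a rule that only moves within its group is not reported and a duplicated line collapses into one; a production tool might choose to surface both.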

If you add a domain that doesn't have a robots.txt file yet, we'll automatically detect when the file is created, start monitoring it, and send you a welcome notification with its initial content.

All plans support monitoring multiple domains; how many you can add depends on your plan's limits.

We store the complete history of robots.txt changes for each monitored domain, so you can follow how your robots.txt file evolves over time, with timestamps and visual diffs.

If your robots.txt file returns a 404 error or becomes inaccessible, we'll alert you as soon as a check detects it, so you can fix the issue quickly.
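Detecting a missing or inaccessible file mostly comes down to interpreting the HTTP status of the robots.txt request. The sketch below uses only Python's standard library; `fetch_status` and `classify` are hypothetical names, not PageRadar's code. The state labels follow RFC 9309 (the Robots Exclusion Protocol), which also shows why both cases deserve an alert: a 4xx response lets crawlers treat every URL as allowed, while a server or network error makes them assume everything is disallowed.

```python
import urllib.error
import urllib.request
from typing import Optional


def fetch_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Return the final HTTP status for url, or None if the server can't be reached."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status  # urlopen follows redirects, so this is the final status
    except urllib.error.HTTPError as err:  # 4xx and 5xx responses are raised as HTTPError
        return err.code
    except OSError:  # URLError (DNS failure, refused connection) and timeouts subclass OSError
        return None


def classify(status: Optional[int]) -> str:
    """Map a robots.txt fetch result to a monitoring state."""
    if status is None or status >= 500:
        return "unreachable"  # RFC 9309: crawlers must assume complete disallow
    if 400 <= status < 500:
        return "unavailable"  # RFC 9309: crawlers may treat every URL as allowed
    if 200 <= status < 300:
        return "ok"
    return "unexpected"  # e.g. a 3xx left over after urlopen gave up on a redirect chain
```

On each scheduled check, a monitor built this way would call `classify(fetch_status(url))` for the domain's robots.txt URL and raise an alert for any state other than `"ok"`.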

Robots.txt monitoring is especially useful during site migrations, when rules can change accidentally as you move to new hosting or a new CMS platform. Monitoring helps you catch unintended changes that could block search engines.