Should You Block AI Bots?

AI bots are everywhere.

GPTBot, ClaudeBot, Google-Extended... These crawlers are scanning your site right now to train language models or power AI-generated answers. The question isn't whether you're affected, but what you should do about it.

What are AI bots and what do they want?

Unlike traditional crawlers such as Googlebot, which index pages for search, AI bots serve one of two purposes:

- Training data collection: GPTBot, ClaudeBot, and CCBot download your content so it can be used to train language models.

- Real-time retrieval: ChatGPT-User and Perplexity-User fetch your pages when a user asks a question, potentially linking back to your site.

This creates both opportunity and risk.

Why some sites block AI bots

The main reasons publishers choose to block:

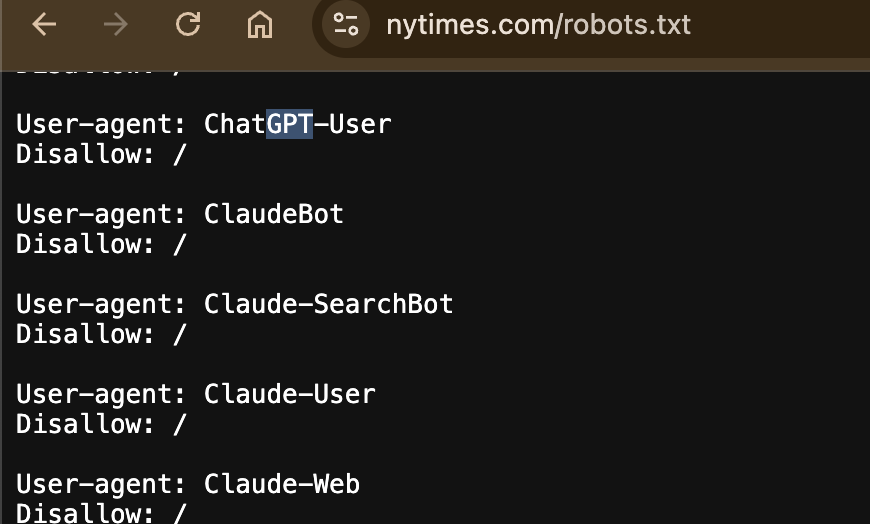

- Intellectual property protection. Media and publishers don't want their content training AI models without compensation. The New York Times, Reuters, and Associated Press have all blocked these crawlers.

- Traffic preservation. When an AI tool answers a user's question directly, the user has no need to click through to your site. Fewer visits mean less ad revenue.

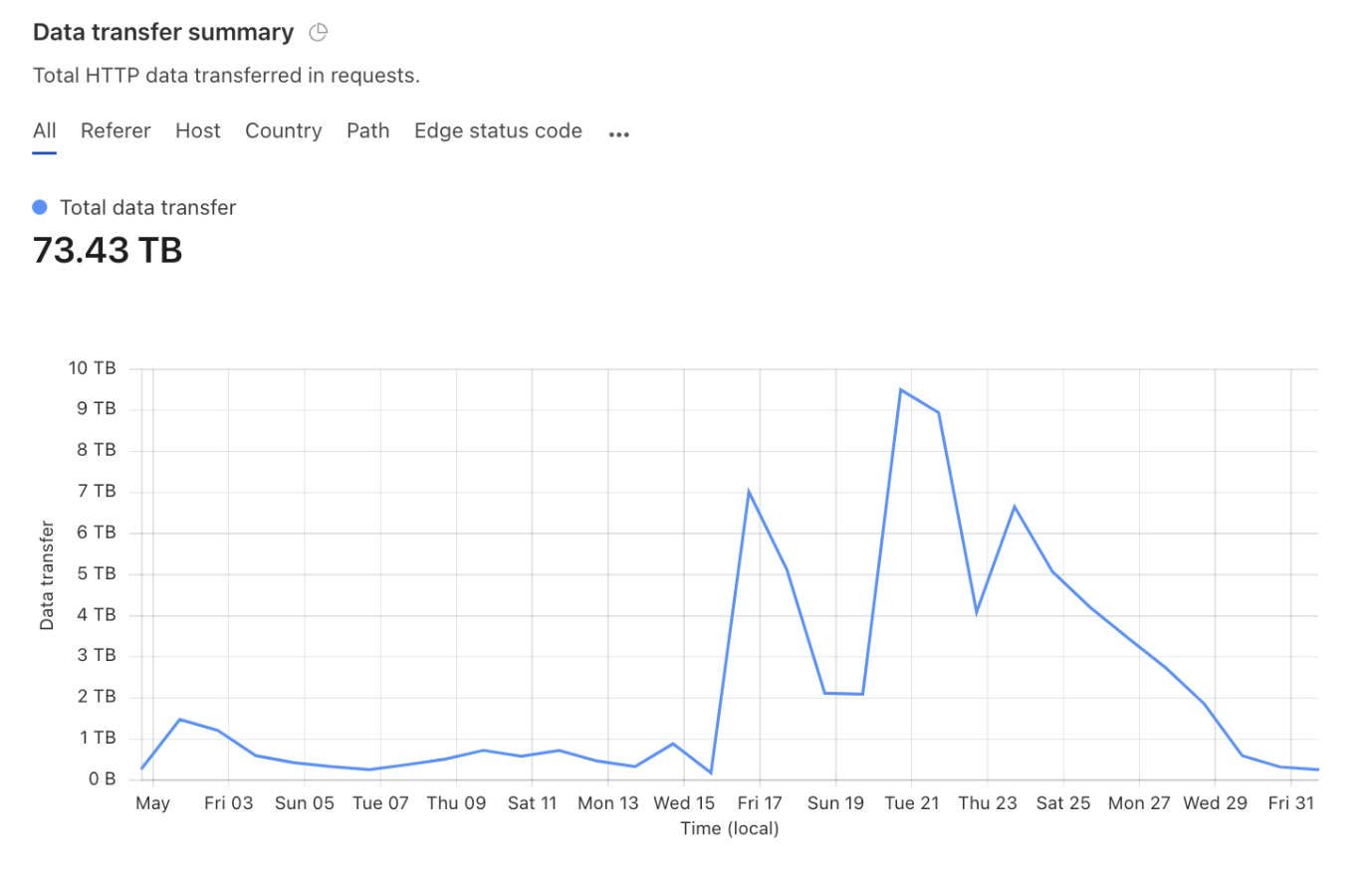

- Server load. Some AI crawlers are aggressive. Read the Docs cut its bandwidth by 75% (from 800 GB to 200 GB per day) by blocking AI bots, saving thousands of dollars per month.

- Analytics contamination. AI crawler traffic can skew your data, making it harder to understand real user behavior.

Why you probably shouldn't block AI bots

Here's the counterargument.

AI visibility is the new SEO. AI-referred traffic grew 527% between January and May 2025. ChatGPT has 800+ million weekly users. Google's AI Overviews appear in 16-21% of searches. Block these bots and you lose visibility in this traffic channel.

Higher-quality traffic. Visitors from AI platforms convert 4.4x better than organic search visitors. They've already been "pre-qualified" by asking specific questions before clicking through.

You're cutting yourself off from the future. Blocking AI bots is like blocking Google in 2005. Search is becoming conversational and AI-driven. Opting out now means losing market share tomorrow.

The data is already out there. Models trained on 2023-2024 data may already include your content. Blocking now only prevents future updates and real-time citations.

Who should consider blocking

Blocking makes sense if:

- Your content is paywalled or premium (subscriptions are your core revenue)

- You're a major publisher dependent on ad revenue from page views

- You're in a legally sensitive industry (healthcare, legal, finance)

- Your servers are genuinely struggling with crawler load

Who should allow AI bots

Allow them if:

- Brand visibility in AI responses matters to you

- You want traffic from AI search (it's growing fast)

- Your content is already publicly available

- You're building thought leadership in your space

The middle ground: selective blocking

You don't have to choose all or nothing. Block training bots while allowing assistant bots that might send traffic:

    # Allow search and assistant bots (can drive traffic)
    User-agent: ChatGPT-User
    Allow: /

    User-agent: OAI-SearchBot
    Allow: /

    User-agent: PerplexityBot
    Allow: /

    # Block training bots (extract value without return)
    User-agent: GPTBot
    Disallow: /

    User-agent: ClaudeBot
    Disallow: /

    User-agent: Google-Extended
    Disallow: /

    User-agent: CCBot
    Disallow: /

How to block AI bots (if you decide to)

Method 1: robots.txt

Add these lines to your robots.txt file:

    User-agent: GPTBot
    Disallow: /

    User-agent: ClaudeBot
    Disallow: /

    User-agent: anthropic-ai
    Disallow: /

    User-agent: Google-Extended
    Disallow: /

    User-agent: CCBot
    Disallow: /

    User-agent: PerplexityBot
    Disallow: /

    User-agent: Bytespider
    Disallow: /

Limitation: robots.txt is a polite request, not a security gate. Reputable bots honor it; bad actors ignore it.
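Before deploying rules like these, it can help to check that they behave the way you expect. The sketch below uses Python's standard `urllib.robotparser` module to test a shortened version of the selective policy from this article. The `is_allowed` helper and the `example.com` URL are illustrative assumptions, and Python's parser may not match every crawler's own interpretation of robots.txt, so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# Shortened selective policy: allow an assistant bot, block training bots.
ROBOTS_TXT = """\
User-agent: ChatGPT-User
Allow: /

User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt text permits user_agent to fetch url.

    Hypothetical helper for illustration; it only wraps the standard parser.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/blog/post"  # placeholder URL
    for bot in ("ChatGPT-User", "GPTBot", "ClaudeBot", "Googlebot"):
        verdict = "allowed" if is_allowed(ROBOTS_TXT, bot, page) else "blocked"
        print(f"{bot}: {verdict}")
```

Bots with no matching group (Googlebot here) are allowed by default, which is why blocking has to name each crawler explicitly.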

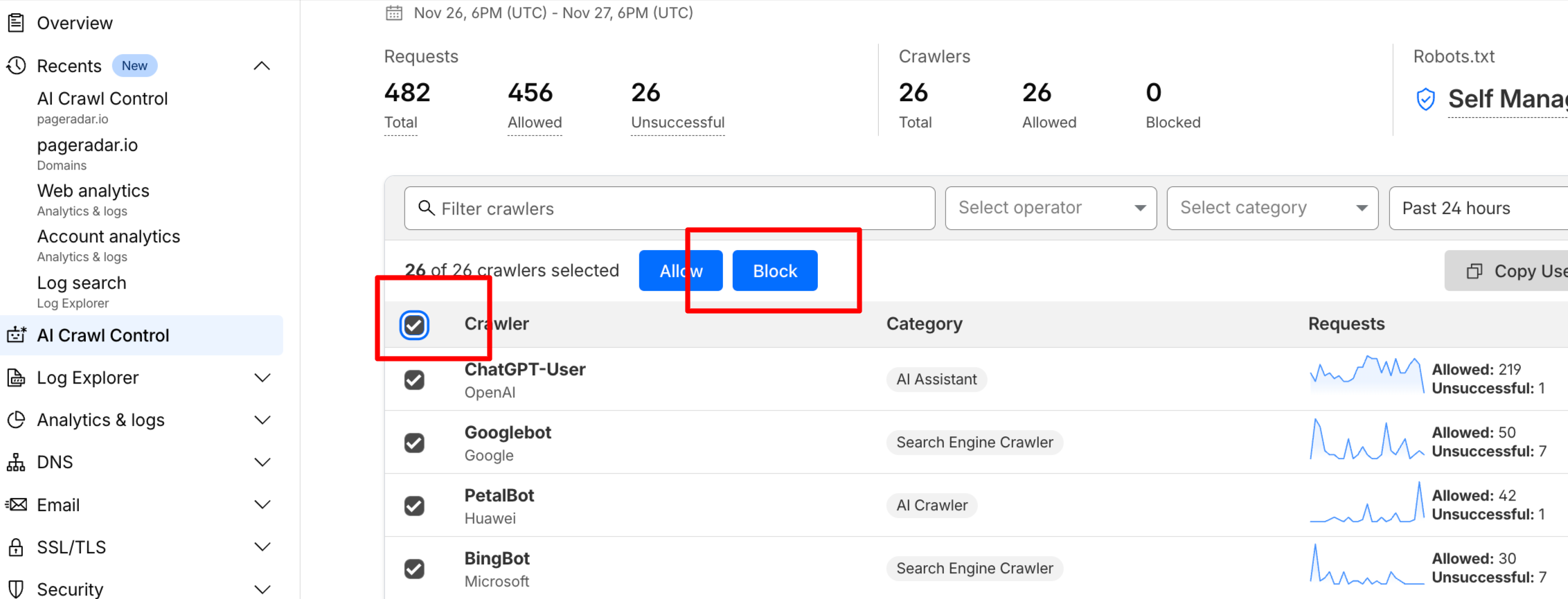

Method 2: Cloudflare One-Click Block

If you use Cloudflare, open "AI Crawl Control", select all crawlers, and enable "Block".

Method 3: Server-Level Blocking

Use firewall rules to block known AI crawler IP ranges. This is more effective than robots.txt, but the ranges change over time, so the rules need ongoing maintenance.
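As a rough illustration of what an IP-range rule does, here is a minimal Python sketch using the standard `ipaddress` module. The CIDR ranges are reserved documentation networks used as placeholders, not real crawler ranges, and `is_blocked` is a hypothetical helper. In practice you would load the ranges published by each bot operator and enforce them in your firewall or web server rather than in application code.

```python
import ipaddress

# Placeholder ranges (reserved documentation networks), NOT real crawler IPs.
# Replace with the ranges published by the bot operators you want to block.
BLOCKED_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_blocked(client_ip: str) -> bool:
    """Return True if client_ip falls inside any blocked CIDR range."""
    address = ipaddress.ip_address(client_ip)
    # An IPv4 address is never "in" an IPv6 network (and vice versa),
    # so mixed lists are safe to check this way.
    return any(address in network for network in BLOCKED_RANGES)


if __name__ == "__main__":
    for ip in ("192.0.2.15", "203.0.113.9", "2001:db8::1"):
        print(ip, "blocked" if is_blocked(ip) else "allowed")
```

The maintenance cost mentioned above lives entirely in keeping `BLOCKED_RANGES` current.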

AI bot user agents to know

For a complete and regularly updated list, check Cloudflare's Bot Directory or our AI User Agents List, which tracks 55+ active AI crawlers.

Our verdict

Unless you have a specific technical or business reason, we advise against blocking legitimate AI bots. The visibility benefits of being indexed and cited by AI tools outweigh the risks.

The smarter strategy is to embrace the AI-powered landscape:

- Monitor your logs: see which bots are crawling your site and how often (use PageRadar's AI User Agents tool to generate custom robots.txt rules, if needed)

- Optimize your content for AI citation (clear structure, authoritative sourcing)

- Track AI referral traffic as an emerging channel

- Consider selective blocking only if you have premium content worth protecting
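For the log-monitoring step in the list above, a small script is often enough to get a first picture. The following Python sketch counts requests per AI bot by looking for known user-agent tokens in access log lines. The token list is a partial set taken from the bots named in this article, the sample log lines are made up, and `count_ai_bot_hits` is a hypothetical helper. User agents can be spoofed, so treat the counts as an estimate.

```python
from collections import Counter

# Partial list of user-agent tokens, taken from the bots named in this article.
AI_BOT_TOKENS = (
    "GPTBot",
    "ChatGPT-User",
    "OAI-SearchBot",
    "ClaudeBot",
    "CCBot",
    "PerplexityBot",
    "Bytespider",
)


def count_ai_bot_hits(log_lines):
    """Count log lines per AI bot token (case-insensitive substring match)."""
    counts = Counter()
    for line in log_lines:
        lowered = line.lower()
        for token in AI_BOT_TOKENS:
            if token.lower() in lowered:
                counts[token] += 1
                break  # attribute each request to at most one bot
    return counts


# Made-up sample lines in a combined-log-like format, for illustration only.
SAMPLE_LOG = [
    '192.0.2.1 - - [01/Jan/2025:00:00:01 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '192.0.2.2 - - [01/Jan/2025:00:00:02 +0000] "GET /blog HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; ClaudeBot/1.0)"',
    '192.0.2.3 - - [01/Jan/2025:00:00:03 +0000] "GET /blog HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (Windows NT 10.0) Firefox/130.0"',
    '192.0.2.1 - - [01/Jan/2025:00:00:04 +0000] "GET /about HTTP/1.1" 200 1024 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
]

if __name__ == "__main__":
    for bot, hits in count_ai_bot_hits(SAMPLE_LOG).most_common():
        print(f"{bot}: {hits}")
```

To run it against a real file, pass an open file object instead of `SAMPLE_LOG`, since the function only needs an iterable of lines.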

AI is becoming part of search. Block it, and you'll show up less.